Open-Source AI Models

OpenAI has launched GPT-OSS-120B and GPT-OSS-20B, its first open-source AI models in six years. Designed for advanced reasoning and local deployment, both models are licensed under Apache 2.0. Because the weights can be downloaded, modified, and run on a developer's own hardware, they offer a customizable, lower-cost alternative to hosted AI services. CEO Sam Altman emphasized their role in democratizing AI access for global innovation.

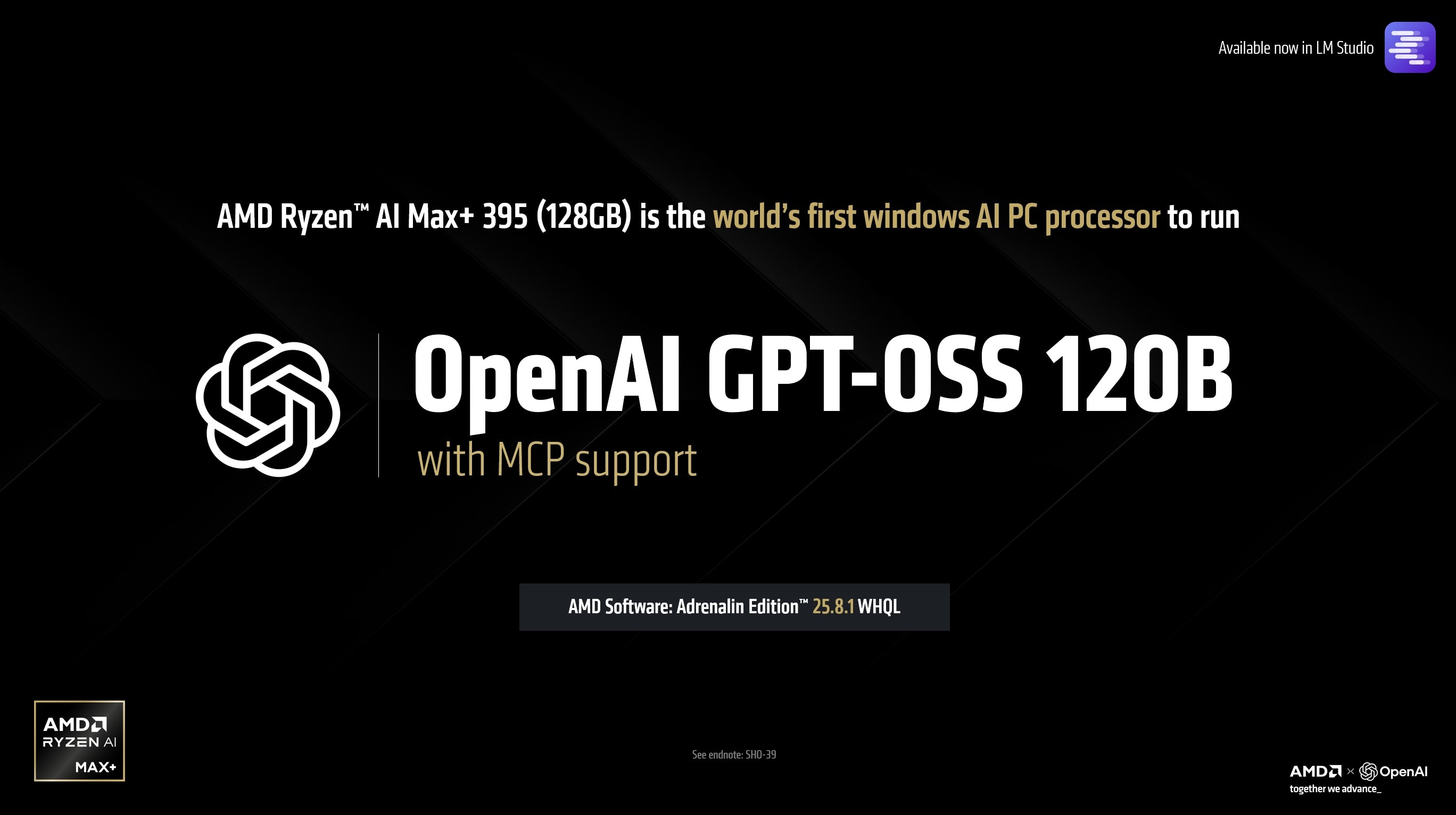

Image: AMD

OpenAI’s Bold Return to Open-Source Roots

On August 5, 2025, OpenAI released GPT-OSS-120B and GPT-OSS-20B, its first open-source models since GPT-2 in 2019. Available for free on Hugging Face, these models mark a major shift toward openness and community-led development. GPT-OSS-120B has 117 billion parameters, while GPT-OSS-20B has 21 billion.

Both models use a Mixture-of-Experts (MoE) architecture, which activates only 5.1B and 3.6B parameters per token, respectively. Because only a small share of the weights (roughly 4% and 17%) is used for each token, inference needs far less compute than a dense model of the same total size. Sam Altman stated that OpenAI aims to place cutting-edge AI in the hands of developers globally. As a result, innovation can flourish without reliance on expensive cloud setups.
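To make the "active parameters" idea concrete, here is a minimal sketch in Python. The parameter counts come from the figures above; the router itself is a toy illustration of top-k expert selection, and its sizes (8 experts, 2 chosen per token) are hypothetical values picked for readability, not the configuration of either model.

```python
import math
import random

# Parameter counts quoted in the article: (total, active per token), in billions.
MODELS = {
    "GPT-OSS-120B": (117.0, 5.1),
    "GPT-OSS-20B": (21.0, 3.6),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters that take part in one token's forward pass."""
    return active_b / total_b


def route_top_k(router_logits: list[float], k: int) -> dict[int, float]:
    """Toy top-k router: keep the k highest-scoring experts and
    renormalise their scores with a softmax so the weights sum to 1."""
    top = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )[:k]
    peak = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        share = active_fraction(total, active)
        print(f"{name}: {share:.1%} of parameters active per token")

    # Hypothetical sizes for illustration only: 8 experts, 2 selected per token.
    rng = random.Random(0)
    logits = [rng.gauss(0.0, 1.0) for _ in range(8)]
    print(route_top_k(logits, k=2))
```

Only the experts returned by the router would run for that token; the rest of the expert weights sit idle, which is where the compute savings come from.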

Performance and Capabilities

The models excel in reasoning, coding, and general knowledge. GPT-OSS-120B rivals the performance of OpenAI’s proprietary o4-mini on benchmarks such as Codeforces and AIME 2025. GPT-OSS-20B, meanwhile, is comparable to o3-mini and can run efficiently on consumer-grade hardware: it fits on devices with 16GB of RAM, including laptops and Snapdragon-based smartphones.
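A rough back-of-the-envelope check shows why precision matters for the 16GB figure. The sketch below estimates the size of the weights alone from the parameter counts reported for the two models (117B and 21B). The bit widths (16 and 4 bits per parameter) are assumptions chosen for illustration, since the article does not say which precision the released weights use, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


def fits_in_ram(params_billion: float, bits_per_param: float, ram_gb: float) -> bool:
    """True if the weights alone fit; real usage needs extra headroom."""
    return weight_memory_gb(params_billion, bits_per_param) <= ram_gb


if __name__ == "__main__":
    # Bit widths are assumed values, not taken from the article.
    for name, params in (("GPT-OSS-20B", 21.0), ("GPT-OSS-120B", 117.0)):
        for bits in (16, 4):
            size = weight_memory_gb(params, bits)
            verdict = "fits" if fits_in_ram(params, bits, 16.0) else "does not fit"
            print(f"{name} at {bits}-bit: ~{size:.1f} GB, {verdict} in 16 GB")
```

Under these assumptions, 21 billion parameters take about 10.5 GB at 4 bits but 42 GB at 16 bits, so a 16GB device is only plausible with aggressive quantization.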

Each model handles a 128,000-token context length, which suits large documents and complex tasks. Furthermore, they support chain-of-thought reasoning, web browsing, and Python code execution. However, they are text-only and lack image processing or other multimodal functions.

Safety and Customization

OpenAI focused heavily on safety throughout the development process. The company tested the models extensively to ensure they stay below critical risk thresholds, especially in cybersecurity and biology. According to OpenAI, even versions deliberately fine-tuned for adversarial use stayed below those thresholds.

Thanks to the Apache 2.0 license, developers can freely modify and use these models. Deployment options range from running locally on AMD Ryzen AI chips to scaling on AWS and Azure. OpenAI also collaborated with NVIDIA, Qualcomm, and Microsoft. On NVIDIA’s RTX 5090 GPU, GPT-OSS-20B reportedly reaches speeds of up to 256 tokens per second; the larger GPT-OSS-120B needs more memory than a single consumer GPU of that class provides.
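To put the quoted throughput in perspective, the short sketch below converts tokens per second into wall-clock time for a response of a given length. The 1,024-token response length is a hypothetical example, and because the quoted figure is an "up to" number, the result should be read as a best case rather than a typical one.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a constant decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    peak_rate = 256.0       # tokens per second, the peak figure quoted above
    response_tokens = 1024  # hypothetical response length
    seconds = generation_seconds(response_tokens, peak_rate)
    print(f"{response_tokens} tokens at {peak_rate:.0f} tok/s: {seconds:.1f} s")
```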

Impact on the AI Landscape

This release disrupts the open-weight model space, long dominated by Meta, Mistral, and DeepSeek. OpenAI positions itself as a competitive force by offering affordable, high-performance alternatives. Altman emphasized the strategic importance of this move, especially given rising competition from Chinese AI firms.

However, the models hallucinate more often than OpenAI’s proprietary systems. On the PersonQA benchmark, GPT-OSS-120B and GPT-OSS-20B posted hallucination rates of 49% and 53%, respectively. That is roughly three times o1’s 16%, signaling a clear area for improvement.

Despite this, developers are adopting the models rapidly across sectors like healthcare, education, and software development. Their open nature and adaptability may reshape the open-source AI space, sparking a new wave of innovation.